Solutions Engineer & AI Innovator

Human-centered AI innovator with solutions that simply work and drive meaningful impact

Welcome to my professional portfolio, showcasing a suite of innovative AI and ML tools designed to push boundaries and empower developers and researchers alike. From GlitchGuru, an 8-bit animated AI assistant combining RAG document intelligence with nostalgic visuals, to the BitNet Hybrid Orchestrator, an edge-ready framework for efficient, secure AI orchestration with integrated safety, my focus is on performance and robust solutions. Dive into SmolLM Fine-Tuner for accessible, memory-optimized fine-tuning of small language models on platforms like Google Colab, or rapidly generate diverse, temporary SQL test data with RogueDB, complete with an interactive GUI and API.

My projects are built to democratize advanced AI, streamline workflows, and offer unique, engaging experiences, bridging the gap between cutting-edge technology and practical, user-friendly applications. Explore my work and see how I'm crafting the future of intelligent systems. If you're interested in collaboration or have any questions, please visit my contact page to get in touch!

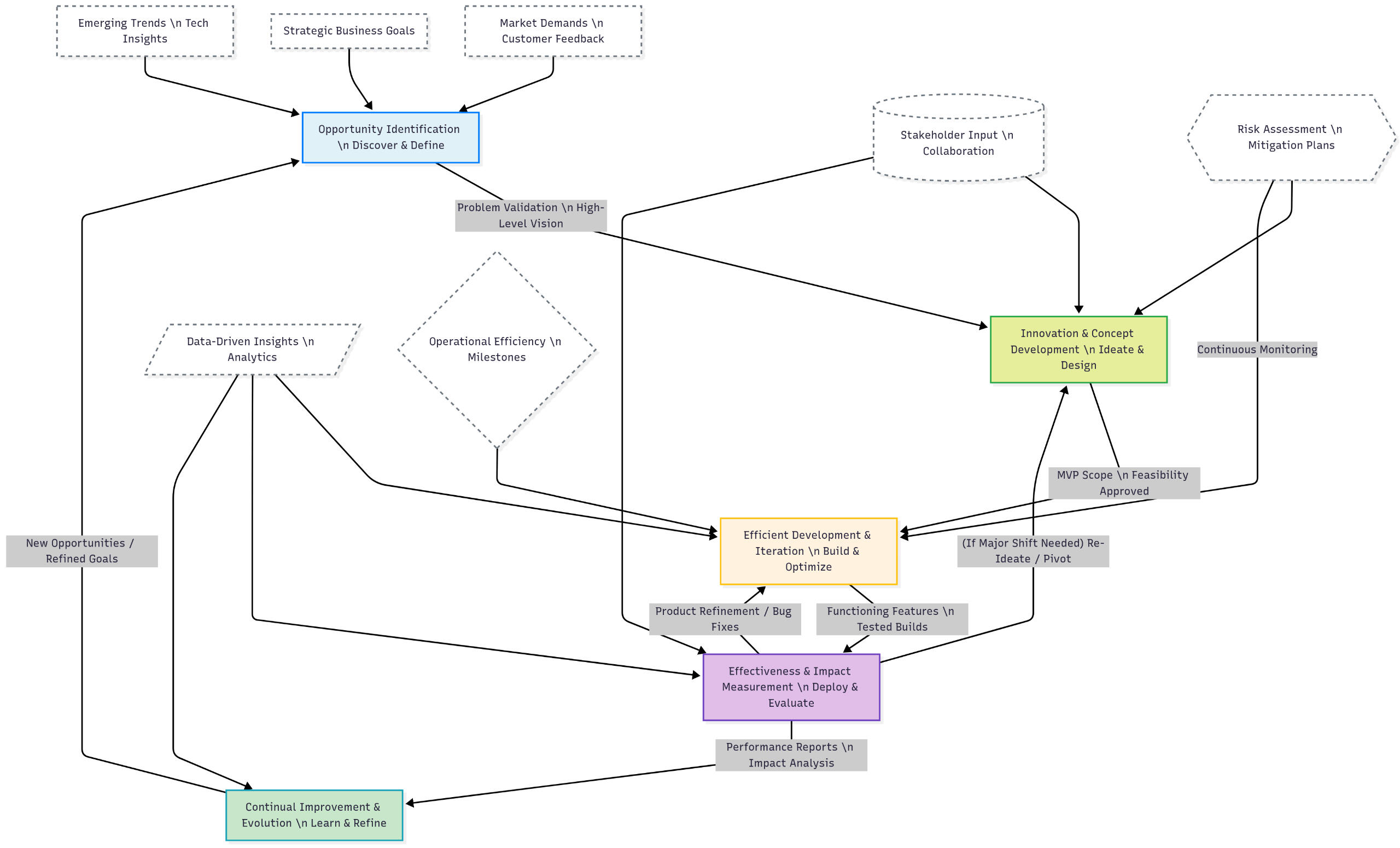

My approach to technology development is rooted in a dynamic lifecycle focused on continual innovation, efficiency, and effectiveness. It begins with identifying opportunities, actively seeking emerging trends and unmet needs to spark groundbreaking ideas. These innovations are then meticulously developed through a process of continual improvement, relentlessly optimizing workflows and resources to achieve peak efficiency. As we refine and iterate, the goal is always to become more effective, ensuring our solutions not only perform optimally but also deliver maximum impact. This cyclical process from insight to iteration to impact drives us to consistently deliver high-value, cutting-edge solutions that solve real-world problems.

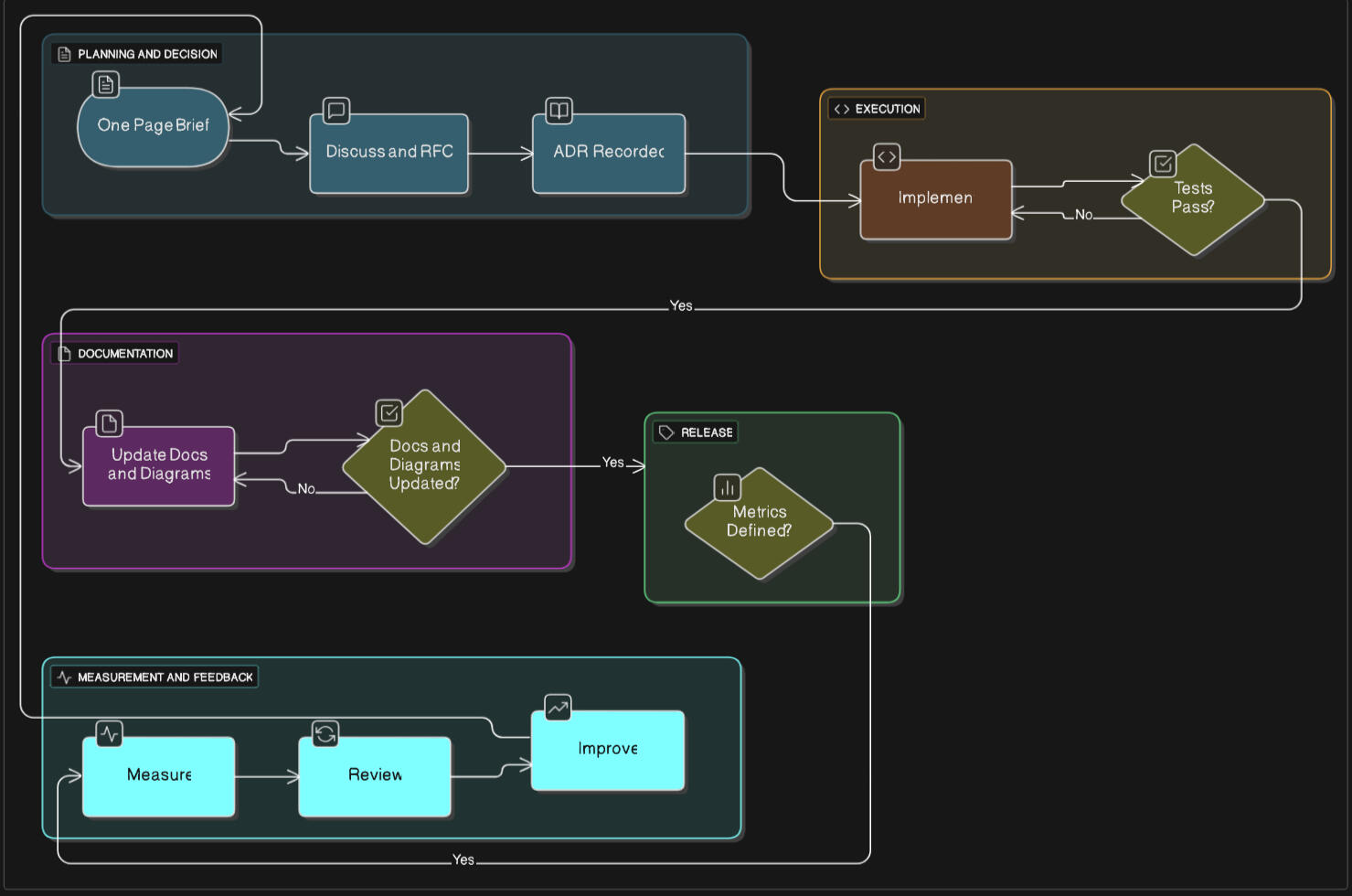

🧠 Communication • Documentation • Continuous Improvement (CDCI)

I make complex systems easy to understand, operate, and improve. CDCI keeps docs and diagrams versioned with the code, decisions traceable, and changes measured so each release gets a little better.

How I work

- Single source of truth: Docs live in-repo; diagrams-as-code (Mermaid/PlantUML).
- Small, reviewable changes: Brief → ADR → PR with code + docs together.
- Quality gates: Diagrams compile, runbooks updated, release notes + metrics defined.
- Measure & learn: Track latency, cost, uptime/MTTR, incidents, doc freshness, lead time.

Core artifacts

Briefs • C4/sequence diagrams • ADRs • Runbooks • RFCs • Release notes • Post-mortems • Metrics notes

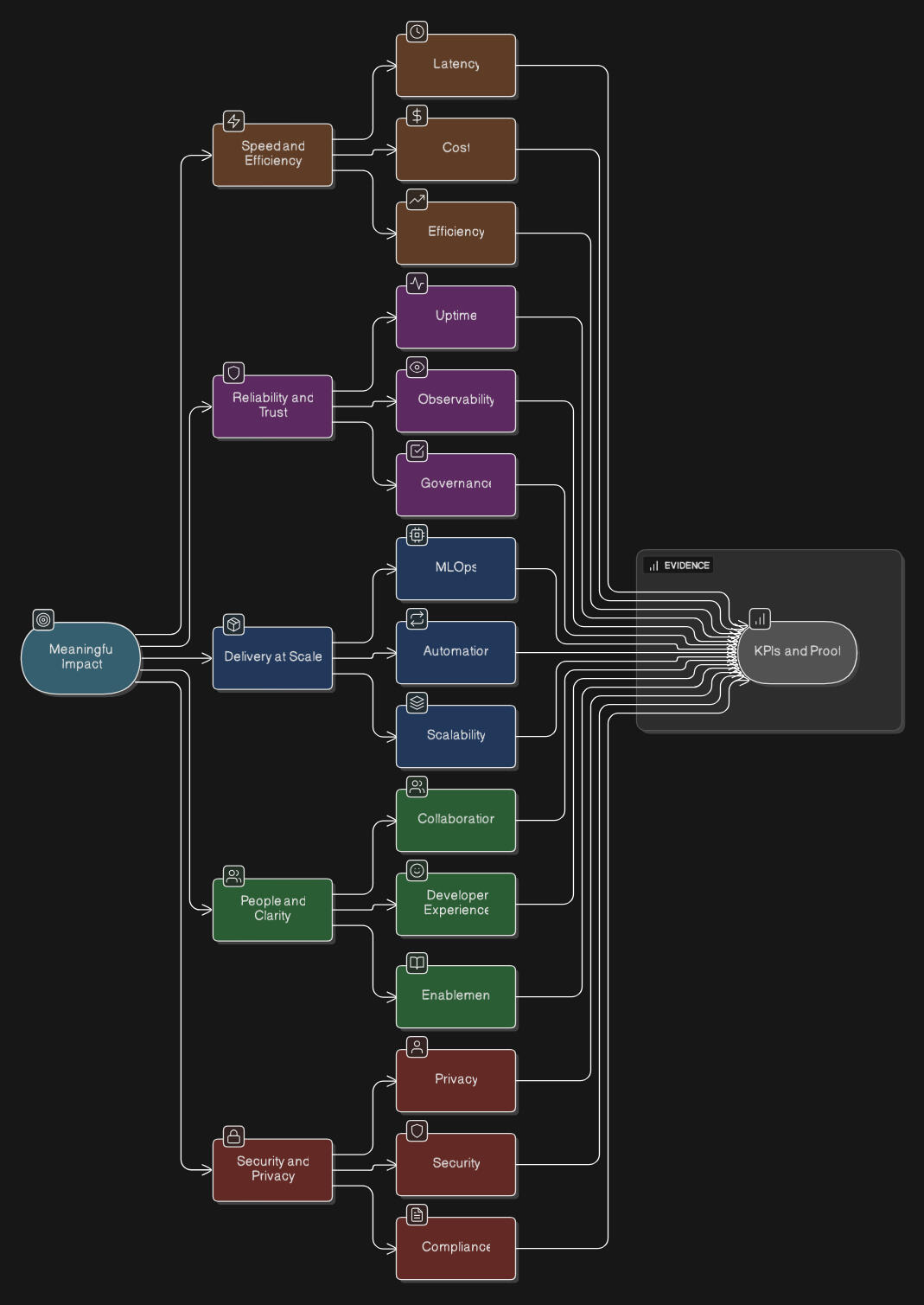

📏 Meaningful Impact

I focus on outcomes you can feel and measure. Five pillars guide how I deliver and prove value.

Speed & Efficiency

SLM-first pipelines and lean orchestration keep latency low and costs predictable.

Latency • Cost • Efficiency

Reliability & Trust

Self-healing workflows, monitoring, and guardrails make systems dependable and auditable.

Uptime • Observability • Governance

Delivery at Scale

From prototype to production with CI/CD and testing so teams keep shipping, safely.

MLOps • Automation • Scalability

People & Clarity

Human-centered design, enablement, and docs so teammates do their best work.

Collaboration • DX • Enablement

Security & Privacy

Privacy-by-design, encryption, and data anonymization keep users and IP protected.

Privacy • Security • Compliance

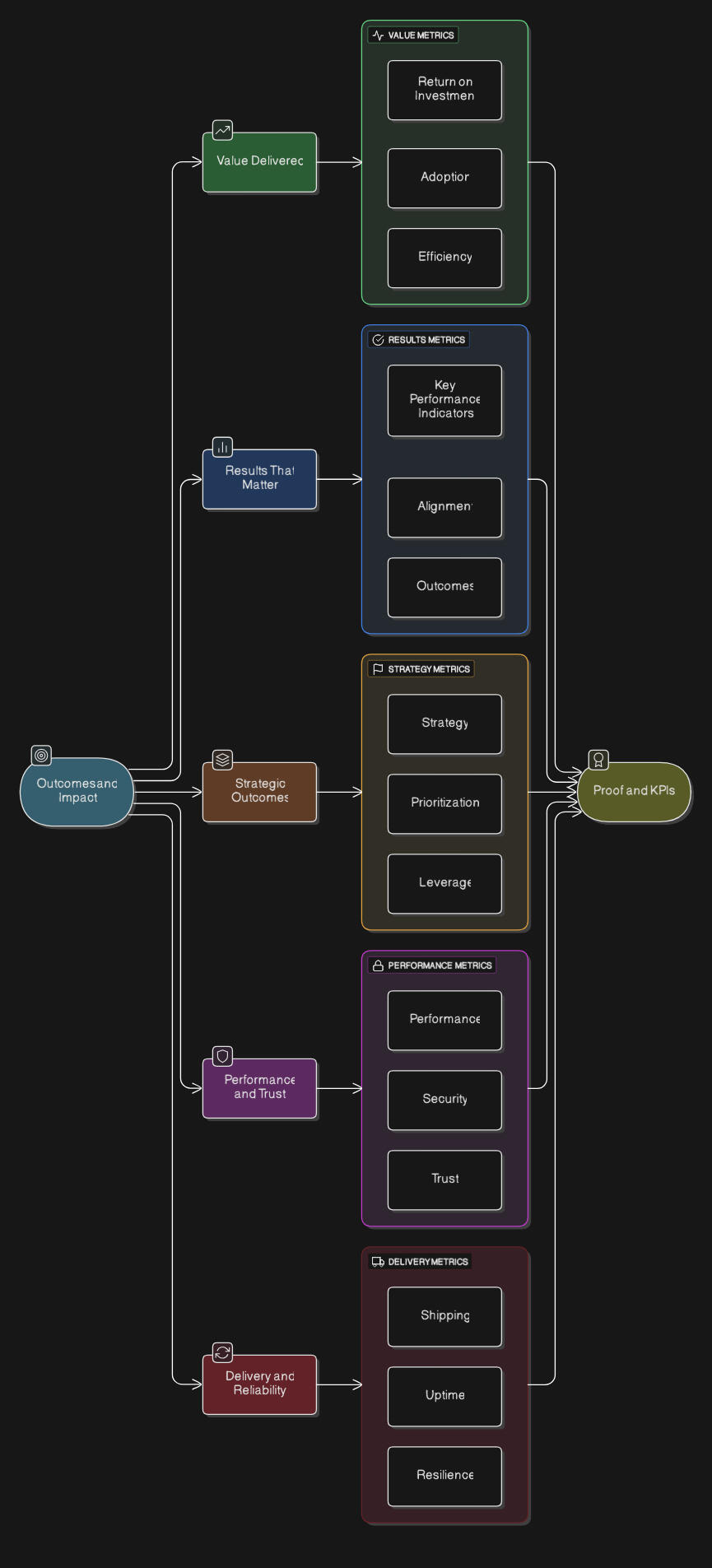

🔄 Outcomes & Impact

Measurable Value

Ship increments tied to clear metrics and before→after deltas.

ROI • Adoption • Efficiency

KPI Alignment

Map OKRs to technical KPIs and prioritize what moves them.

KPIs • Alignment • Outcomes

Strategic Leverage

Roadmaps and trade-offs that compound value over time.

Strategy • Prioritization • Leverage

Performance & Security

Define SLIs/SLOs; build in speed, safety, and least privilege.

Latency • Reliability • Security

Reliable Delivery

CI/CD, feature flags, canaries, and safe automatic rollbacks.

Shipping • Uptime • Resilience

Visibility & Reporting

Dashboards and release notes that show impact in plain language.

Evidence • Transparency • Accountability

Continuous Improvement

Track MTTR, change failure rate, and lead time; improve each sprint.

Velocity • Quality • Learning

✋ Tangible Results

I deliver practical wins you can measure: fast paths to value, reliable systems, and clear enablement.

Speed & Efficiency

SLM-first routing and lean orchestration keep latency low and costs predictable.

Latency • Cost • Efficiency

Reliability & Trust

Self-healing pipelines, proactive monitoring, and guardrails make systems dependable and auditable.

Uptime • Observability • Governance

Delivery at Scale

From prototype to production with CI/CD, testing, and safe rollouts, so teams keep shipping.

MLOps • Automation • Scalability

People & Clarity

Human-centered design, enablement, and clear docs so teams do their best work.

Collaboration • DX • Enablement

Security & Privacy

Privacy-by-design, encryption, and anonymization keep users and IP protected.

Privacy • Security • Compliance

© 2025 Shiy Sabiniano


Mission and Vision

My Mission

Build human-centered systems, especially AI, that simply work: fast, reliable, safe, and measurable. I turn complex problems into clear, auditable products using SLM-first orchestration, rigorous MLOps, and privacy-by-design so teams ship faster with confidence and lower cost.

My Vision

Make trustworthy technology as dependable and accessible as electricity—small, efficient components orchestrated thoughtfully, with governance and documentation built in. I want to shorten the path from idea to impact, raise the bar on reliability and ethics, and leave ladders behind so more people can build what’s next.

Here’s how I plan to innovate beyond the AI/SLM space across the full stack of people, product, and platform:

Beyond SLMs: Where I Innovate

1) Human-Centered UX & Interaction

Make advanced systems feel simple: clear flows, assistive micro-copy, diagrams you can act on, and interfaces that meet people where they are (chat, dashboards, mobile, and lightweight 2D/retro UIs).

Clarity • Accessibility • Adoption

2) MLOps & Platform Reliability

Treat reliability as a feature: reproducible pipelines, zero-downtime deploys, self-healing orchestration, and policy-based rollbacks so teams can ship without fear.

CI/CD • Orchestration • SLOs

3) Data Governance & Privacy-by-Design

Bake in consent, minimization, and anonymization; establish lineage, quality checks, and retention rules so data is useful and trustworthy.

Lineage • Quality • Privacy

4) Trust, Safety & Compliance

Operationalize guardrails, audit trails, and model risk management; make explainability and incident response part of the product, not paperwork.

Governance • Explainability • Auditability

5) Developer Experience (DX)

Golden paths, templates, and docs-as-code (CDCI) that reduce cognitive load: one-page briefs, ADRs, runnable examples, and “link from alert → runbook” patterns.

Velocity • Consistency • Onboarding

6) Open Standards & Interoperability

Design for plug-and-play: clean APIs, event schemas, SBOMs, and license-sane components (MIT/Apache-style) so teams can extend safely without vendor lock-in.

APIs • Standards • Supply Chain

7) Edge, Offline-First & Resilience

Push intelligence closer to where it’s needed: low-footprint runtimes, cached decisioning, and graceful degradation when the network is flaky.

Latency • Resilience • Cost

8) Economics & Sustainability

Engineer for performance-per-dollar and energy-per-result: workload autoscaling, smart batching, and right-sizing that make capability affordable and responsible.

Cost • Efficiency • Footprint

9) Security & Software Supply Chain

Shift-left security with signed artifacts, secrets hygiene, least-privilege defaults, and continuous scanning: security that’s enforced by tooling, not hope.

Integrity • Least Privilege • Hardening

10) Education, Enablement & Community

Reusable playbooks, workshops, and internal “how-to” kits that turn users into contributors and contributors into maintainers.

Knowledge • Uplift • Scale

11) Product & Business Model Design

Package capability so it’s easy to buy, adopt, and prove value: clear SLAs, ROI dashboards, and pricing aligned to outcomes, not hype.

Simplicity • Outcomes • Fit

SLMs are one tool in my kit. My larger goal is to build an operating system for delivering trustworthy technology, where people understand it, teams can run it, leaders can trust it, and the value compounds over time.


Projects

Welcome to our collective projects. Here, we're building the infrastructure for autonomous AI, one project at a time. Each initiative below represents a core pillar of our mission: to create intelligent systems that can learn, adapt, and evolve. From ultra-efficient models to autonomous development lifecycles, our work is driven by a commitment to collaborative excellence and strategic innovation.

Please note: we are currently migrating repositories, so some links may not work.

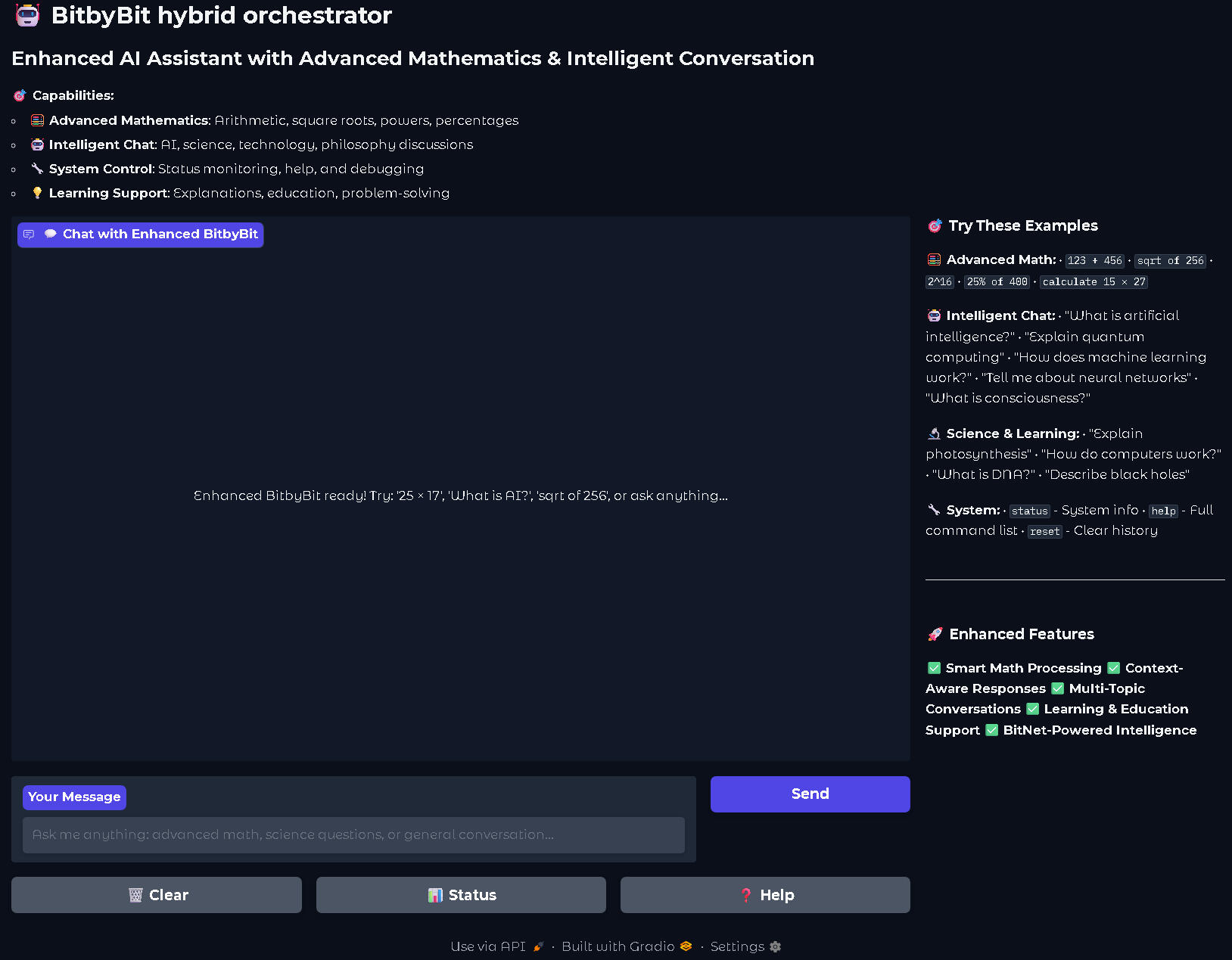

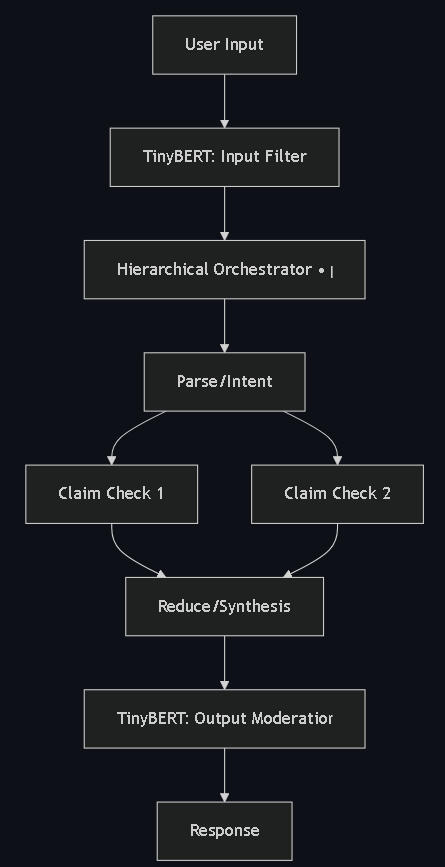

The BitNet Hybrid Orchestrator is a compact engine designed for orchestrating tasks using hierarchical, parallel, and sequential patterns. It leverages BitNet agents for core reasoning tasks like text processing and summarization, and incorporates TinyBERT as a dual-layer safeguard for input filtering, per-node gates, and output moderation, including PII redaction and toxicity checks. This system is specifically built for efficient operation on edge devices such as phones and SBCs, and it provides a Colab demo, YAML-to-DAG pipeline configuration, documentation, and a multi-turn chat UI.

The SmolLM Fine-Tuner is a comprehensive, production-ready system designed for fine-tuning small language models, particularly the SmolLM series, on Google Colab's free tier. It democratizes AI fine-tuning through memory-optimized training and LoRA integration, which significantly reduces trainable parameters while maintaining performance. The system offers multi-metric evaluation (ROUGE, BLEU, BERTScore, perplexity), an intuitive Gradio web UI, and automatic results submission to Google Sheets for leaderboard tracking. It supports various small models and datasets, providing a user-friendly and efficient workflow for fine-tuning.

GlitchGuru is a retro-styled AI assistant that combines modern RAG document intelligence with nostalgic 8-bit animations. It processes documents using multi-LLM support and a RAG engine (ChromaDB + Sentence Transformers), then visualizes the AI's thinking, processing, and responding through charming pixel art animations via a Lua-based engine. Designed for versatility, GlitchGuru is available as a Google Colab web version and a standalone desktop application, offering an engaging way to interact with document intelligence.

RogueDB is a Python-based toolkit for quickly generating temporary, synthetic SQLite databases filled with diverse test data, including math, English, logic, and financial information. It's designed for application testing, prototyping, educational use, and API development, offering an interactive GUI and a FastAPI-based API for programmatic access. Optimized for Google Colab, it allows for instant setup and interaction with fresh databases via a public ngrok URL.


About me

My name is Shiy Sabiniano. Over the past decade, I’ve built my career around solving complex problems through engineering and solution design, working in close partnership with cross-functional teams. As a first-generation American, fueled by my mother's journey from the Philippines in pursuit of the American Dream, I am deeply committed to developing, implementing, and supporting systems that help people do their best work, ensuring they can be proactive and innovative.

I specialize in building scalable, cloud-native, AI-driven platforms using Python, Kubernetes, Jenkins, and Docker. A core part of my approach is an SLM-first (Small Language Model First) architecture, treating SLMs as Minimal Viable Products (MVPs) and proofs of concept, particularly for edge devices, that are built with efficiency in mind and can scale up to meet demand.

I build SLM-first orchestration pipelines where compact specialist models serve as MVPs/POCs at the edge and level up to production through staged evaluation. Multiple agents each produce a probability for the possible answers; we then take a weighted vote (think of it as giving more trusted specialists a louder voice) and select the answer with the highest combined support. Before that vote, we recalibrate confidences so that a “70% sure” output actually behaves like 70% over time; this avoids overconfident small models. A risk gate then decides the path: if the predicted impact is low and confidence is genuinely high, we keep the request on the small-model path; if either risk is high or uncertainty is elevated, we escalate to a heavier checker or a human. In practice, that might look like SmolLM2-1.7B handling fast, routine prompts, while edge cases are kicked up to a larger evaluator.

For governance and alignment, I pair rich observability with embedding-level guardrails. Concretely, a compact encoder (e.g., BERT-tiny or MiniLM-L6-v2) turns inputs and outputs into vectors, and we compare those vectors to a small library of “policy” vectors for safety, compliance, and tone. If the similarity crosses a chosen threshold, we flag or quarantine; if it stays comfortably below, we let it pass. We also watch for statistical drift by comparing the current distribution of outputs to the recent past; if today’s behavior deviates more than our tolerance, we halt or roll back. Before any merge or release, a fixed test harness must hold green: precision/recall on known sets, latency and cost budgets, and targeted adversarial checks.

Knowledge is refreshed on a schedule (daily or weekly), then distilled back into the small models using teacher-student training so the footprint stays tiny without losing accuracy. Here, a larger or more accurate “teacher” provides targets; the small model learns to match them closely on curated data.

To make the whole thing empirical and auditable, we maintain a tamper-evident knowledge ledger. Every training or evaluation batch gets a content fingerprint; those fingerprints are chained together so any change would be obvious. We periodically anchor those commitments on-chain (lightweight L1/L2 checkpoints), attach signed model cards and provenance attestations, and store the heavy artifacts in content-addressed storage. When needed, we can prove that privacy and safety checks were run, without exposing the underlying data, using succinct attestations. The outcome is provable lineage for prompts, datasets, checkpoints, and decisions that stakeholders can verify independently.

Beyond the technicals, I’ve led teams, mentored engineers, and partnered with stakeholders to turn these pipelines into measurable business value: clear KPIs, canary rollouts, and short feedback loops to ship fast without sacrificing correctness.

My expertise extends to understanding the communication of different protocols and programming in diverse languages, complemented by a deep knowledge of cryptography from my Certified Blockchain Architect and Expert certifications. This allows me to build robust, versatile systems using a wide selection of toolsets, which is crucial for creating secure and resilient AI and cloud-native solutions.

I’m motivated by practical, well-engineered systems that make a meaningful impact. I favor an SLM-first architecture with clear guardrails and self-healing infrastructure so products are faster, cheaper, and more trustworthy. The aspect of my work that brings me the most personal satisfaction is fostering innovation and maintaining a human-focused UX, ensuring technology simplifies jobs and enhances overall effectiveness and efficiency.

Let's Innovate.
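
As a rough illustration of the calibrate, vote, and risk-gate routing described above, here is a minimal Python sketch. Everything in it is an assumption for illustration: the function names, the thresholds (`min_conf`, `max_risk`), and the use of temperature scaling as the calibration step (the text does not say which calibration method is used).

```python
import math

def calibrate(probs, temperature):
    """Temperature-scale a probability vector (assumed calibration method).

    A temperature above 1 softens overconfident outputs; 1.0 leaves them unchanged.
    """
    logits = [math.log(max(p, 1e-12)) / temperature for p in probs]
    peak = max(logits)
    exps = [math.exp(v - peak) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]

def weighted_vote(agent_probs, weights):
    """Average per-agent distributions, giving trusted agents a larger weight."""
    n_answers = len(agent_probs[0])
    total_weight = sum(weights)
    return [
        sum(w * p[i] for w, p in zip(weights, agent_probs)) / total_weight
        for i in range(n_answers)
    ]

def route(combined, risk, min_conf=0.8, max_risk=0.3):
    """Risk gate: stay on the small-model path only if confident AND low risk."""
    best = max(range(len(combined)), key=combined.__getitem__)
    if combined[best] >= min_conf and risk <= max_risk:
        return ("small_model", best)
    return ("escalate", best)

# Three hypothetical agents scoring two candidate answers.
agents = [[0.9, 0.1], [0.6, 0.4], [0.2, 0.8]]
weights = [3.0, 2.0, 1.0]  # hypothetical trust weights
calibrated = [calibrate(p, temperature=1.0) for p in agents]
combined = weighted_vote(calibrated, weights)
decision = route(combined, risk=0.1)
```

In this toy run the combined support for the leading answer stays below the assumed confidence threshold, so the request is escalated rather than kept on the small-model path.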


Knowledge base

Knowledge Base Coming soon


Contact


SmolLM Fine-Tuner: A Comprehensive and Accessible Framework for Efficient Fine-Tuning of Small Language Models

Introduction

The proliferation of Large Language Models (LLMs) has ushered in new paradigms for artificial intelligence, yet their computational demands often limit accessibility, particularly for researchers and developers operating within resource-constrained environments. Fine-tuning these models for specific tasks is crucial for maximizing their utility. The SmolLM Fine-Tuner project addresses this challenge by providing a comprehensive, production-ready system specifically engineered for the efficient fine-tuning of small language models (SmolLM series and other classic variants) on readily available resources, such as Google Colab's free tier. This framework aims to democratize access to advanced AI fine-tuning by integrating memory-optimized training, parameter-efficient techniques, and multi-metric evaluation within an intuitive user interface.

Methodology and Architecture

The SmolLM Fine-Tuner is designed with a modular and optimized architecture to ensure high performance and accessibility:

1. Memory-Aware Training: A core innovation of the system is its capability to automatically optimize training processes for environments with limited GPU memory (e.g., 12-15GB on Google Colab). This includes leveraging techniques such as mixed precision (FP16) for reduced memory footprint and gradient checkpointing to trade compute for memory efficiency. Real-time GPU and system memory monitoring further aid in efficient resource utilization.

2. LoRA (Low-Rank Adaptation) Integration: To significantly reduce the number of trainable parameters and computational overhead during fine-tuning, the framework incorporates LoRA. This technique allows for highly parameter-efficient training, enabling performance comparable to full fine-tuning with substantially fewer resources. LoRA configuration parameters (rank, alpha, dropout) are fully customizable.

3. Multi-Metric Evaluation Suite: Comprehensive model assessment is crucial for effective fine-tuning. The SmolLM Fine-Tuner integrates a robust evaluation suite that includes:

* Text Generation Metrics: ROUGE and BLEU scores for assessing the quality of generated text.
* Semantic Understanding Metrics: BERTScore for evaluating semantic similarity between generated and reference texts.
* Language Modeling Metrics: Perplexity for gauging the fluency and predictability of the language model.

The framework is designed to be extensible, allowing for the integration of custom evaluation metrics.

4. Interactive User Interface (Gradio): Recognizing the need for accessibility for non-technical users, the system features a professional Gradio web UI. This interface provides a streamlined workflow for configuring models, datasets, and training parameters; monitoring real-time training progress (loss, metrics, memory usage); and conducting comprehensive evaluations.

5. Results Tracking and Leaderboard Integration: To support experimental tracking and reproducibility, the system includes automated integration with Google Sheets for leaderboard updates, comprehensive experiment logging (training configurations and results), and performance visualization tools (training curves, metric comparisons).

The overall architecture allows users to configure fine-tuning parameters via an interactive interface, upon which the system handles data loading, model initialization (supporting SmolLM2 series, GPT2, DistilGPT2, DialoGPT variants), memory optimization, training execution, and multi-metric evaluation, culminating in shareable results.
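
To make the LoRA parameter savings concrete, the short sketch below counts trainable parameters for a single weight matrix. LoRA freezes a `d_out x d_in` weight and trains two low-rank factors, `B` (`d_out x r`) and `A` (`r x d_in`), so the trainable count is `r * (d_out + d_in)`. The layer size and rank here are hypothetical values chosen for the arithmetic, not settings taken from the project.

```python
def full_params(d_out, d_in):
    """Trainable parameters when a d_out x d_in weight matrix is fully fine-tuned."""
    return d_out * d_in

def lora_params(d_out, d_in, rank):
    """Trainable parameters for LoRA factors B (d_out x rank) and A (rank x d_in)."""
    return rank * (d_out + d_in)

# Hypothetical square projection layer and LoRA rank.
hidden = 2048
rank = 8

full = full_params(hidden, hidden)        # 2048 * 2048
lora = lora_params(hidden, hidden, rank)  # 8 * (2048 + 2048)
ratio = lora / full

print(f"full fine-tuning: {full:,} params")
print(f"LoRA (r={rank}): {lora:,} params ({ratio:.2%} of full)")
```

For this assumed layer, the LoRA factors hold under one percent of the parameters that full fine-tuning would update, which is why the technique fits on memory-limited GPUs.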

Key Features and Applications

SmolLM Fine-Tuner's robust feature set facilitates various applications:

* Democratization of AI Fine-Tuning: By optimizing for free-tier cloud environments, the project makes advanced LLM fine-tuning accessible to a broader audience, including students, independent researchers, and developers with limited computational resources.
* Efficient Model Adaptation: It enables rapid and resource-efficient adaptation of small language models to specific tasks or datasets, such as instruction-following (e.g., using Alpaca or Dolly 15k datasets) or specialized text generation.
* Educational Tool: The intuitive interface and comprehensive evaluation metrics make it an excellent platform for learning about LLM fine-tuning, LoRA, and performance evaluation.
* Rapid Prototyping and Experimentation: Developers can quickly iterate on different models, datasets, and fine-tuning parameters to validate hypotheses and build proofs-of-concept.
* Benchmarking and Comparison: The multi-metric evaluation and leaderboard integration provide a standardized way to benchmark and compare the performance of different fine-tuned models.

Conclusion

The SmolLM Fine-Tuner project offers a significant contribution to the field of accessible AI by providing a highly optimized and user-friendly framework for fine-tuning small language models. Its emphasis on memory efficiency, parameter-efficient training with LoRA, comprehensive multi-metric evaluation, and an interactive Gradio interface democratizes access to sophisticated AI capabilities. This framework not only accelerates the development and deployment of tailored language models but also empowers a wider community to engage with and benefit from the advancements in modern artificial intelligence.


BitNet‑Hybrid‑Engine: Edge‑ready hybrid orchestration for small language models.

Blend hierarchical, parallel, and sequential execution in one engine. Use BitNet as the core reasoner for efficient on‑device inference, while TinyBERT runs dual‑layer safety at input and output (with optional per‑node gates). Deploy on laptops, SBCs, or lean VPS—and scale up when you need to.


## Why BitNet‑Hybrid‑Engine?

Modern AI stacks are heavy, expensive, and hard to govern. BitNet‑Hybrid‑Engine flips that script:

* Efficient by design: BitNet‑style quantization keeps models fast on CPU and modest GPUs.
* Safe by default: TinyBERT guard handles moderation, jailbreak defense, and PII redaction.
* Composability without bloat: Mix orchestration patterns in a single DAG. No massive platform required.
* Edge‑first: Works offline or with constrained compute; perfect for field devices and privacy‑sensitive environments.

## What it is

BitNet‑Hybrid‑Engine is a compact orchestration system that executes a YAML → DAG pipeline. You define nodes (agents), dependencies, and guardrails; the engine plans and runs the flow with budget awareness (latency, memory, concurrency).

Included:

* Reasoning agents: `text_processor`, `summarizer` (swap in your BitNet runtime).
* Safety: TinyBERT‑based guard at input, optional per‑node gates, and output.
* UIs: CLI, Web UI (single‑turn + chat), and a Colab demo.
* Docs: Architecture, API, quickstart, roadmap.

## How it works (high‑level)

1. Input arrives → TinyBERT runs pre‑filters (toxicity, jailbreak, PII redaction).
2. Planner builds the DAG based on your YAML: some branches run in parallel, others wait on dependencies.
3. Agents run (BitNet reasoner by default), respecting latency/memory budgets.
4. Reducer synthesizes outputs, then post‑guard checks apply.
5. Response returns with logs/metrics.

> Safety is a function, not an afterthought: you can wrap the whole flow or specific nodes.

## Key capabilities

* Hybrid orchestration: Hierarchical planning with parallel/sequential execution in one pipeline.
* Budget awareness: Configure `latency_ms`, `max_concurrency`, and `memory_mb` per pipeline.
* Swap‑in backends: Keep the agent signatures; bring your BitNet runtime (ONNX, bitnet.cpp, etc.).
* Privacy‑first: Optional PII redaction and content moderation at ingress and egress.
* Edge‑friendly: Lightweight deps and quantization for CPUs and small GPUs.
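
The idea that safety is a function wrapping a node can be sketched in a few lines of Python. This is not the engine's actual API: `guarded`, `redact_pii`, and `shout_agent` are hypothetical names, and a simple email regex stands in for the TinyBERT guard purely so the example runs without model downloads.

```python
import re

# Stand-in for the TinyBERT guard: a plain email pattern (an assumption for this sketch).
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")

def redact_pii(text):
    """Replace anything that looks like an email address with a placeholder."""
    return EMAIL.sub("[REDACTED_EMAIL]", text)

def guarded(agent, pre=True, post=True):
    """Wrap an agent so the guard runs before and/or after it."""
    def run(text):
        if pre:
            text = redact_pii(text)
        output = agent(text)
        return redact_pii(output) if post else output
    return run

def shout_agent(text):
    """Hypothetical agent used only to show the wrapper in action."""
    return text.upper()

safe_agent = guarded(shout_agent, pre=True, post=True)
result = safe_agent("Mail bob@example.com now")
print(result)
```

The same wrapper could be applied to a single node or to a function that runs the whole pipeline, which mirrors the per-node versus whole-flow choice described above.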

## Quickstart

### 1) Colab (zero local setup)

* Upload the notebook from this repo: `notebooks/bitnet-hybrid-engine.ipynb`.
* Or mirror to GitHub to use the Colab badge directly.

### 2) Local (Python 3.10+)

```bash
# Tiny CLI demo
git clone https://gitea.com/Shiy-S/BitNet-Hybrid-Engine.git
cd BitNet-Hybrid-Engine
python -m venv .venv && source .venv/bin/activate  # Windows: . .\.venv\Scripts\Activate.ps1
pip install -r orchestrator/requirements.txt
python orchestrator/cli.py --input "Contact [email protected]. BitNet b1.58 ... TinyBERT ..."

# Full app (Gradio UI + agents + guards)
python standalone/bitnet_hybrid_engine_standalone.py  # http://127.0.0.1:7860
```

---

## Pipeline as data

Define your flow in YAML; the engine turns it into an execution DAG.

```yaml
- id: analyze_text
  name: summarize_and_verify
  budgets: { latency_ms: 1800, max_concurrency: 2, memory_mb: 1200 }
  models: { reasoner: distilbert-base-uncased (bitnet-quantized), guard: tinybert-onnx-int8 }
  policies:
    thresholds: { toxicity_block: 0.5, pii_redact: 0.7, jailbreak_block: 0.6 }
  nodes:
    - id: clean_text
      agent: text_processor
      guard_pre: true
      guard_post: true
      params: { operation: clean }
    - id:  # node id missing in the source
      agent: text_processor
      deps: [clean_text]
      params: { operation: sentiment }
    - id: summarize_text
      agent: summarizer
      deps: [clean_text]
      params: { max_length: 200 }
    - id: claim1
      agent: text_processor
      deps: [clean_text]
      params: { operation: entities }
    - id: claim2
      agent: text_processor
      deps: [clean_text]
      params: { operation: language }
    - id: reduce
      agent: summarizer
      deps: [claim1, claim2]
      params: { max_length: 120 }
```
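
To show how a `deps` list like the one in the pipeline can become an execution plan, here is a small sketch using Python's standard-library `graphlib`. It is not the engine's planner: `plan_waves` is a hypothetical helper, the dependency map is copied by hand from the node ids in the YAML (the node whose id is missing is left out), and the `max_concurrency` chunking is a simplification of real budget-aware scheduling.

```python
from graphlib import TopologicalSorter

# Node id -> list of dependencies, transcribed from the sample pipeline.
DEPS = {
    "clean_text": [],
    "summarize_text": ["clean_text"],
    "claim1": ["clean_text"],
    "claim2": ["clean_text"],
    "reduce": ["claim1", "claim2"],
}

def plan_waves(deps, max_concurrency=None):
    """Group nodes into waves; nodes in the same wave have no unmet dependencies."""
    sorter = TopologicalSorter(deps)
    sorter.prepare()  # raises graphlib.CycleError if the graph has a cycle
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())
        step = max_concurrency or len(ready)
        # Split a large ready set into chunks that respect the concurrency budget.
        for start in range(0, len(ready), step):
            waves.append(ready[start:start + step])
        sorter.done(*ready)
    return waves

for index, wave in enumerate(plan_waves(DEPS, max_concurrency=2), start=1):
    print(f"wave {index}: {wave}")
```

Nodes within a wave could run in parallel, while later waves wait on earlier ones; a production scheduler would likely start a node as soon as its own dependencies finish rather than waiting for a whole wave.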

---

## Who is it for?

* Builders of local/edge apps who need efficient, safe LLM workflows.
* Teams in regulated environments needing moderation and redaction baked in.
* Researchers & tinkerers validating BitNet/TinyBERT stacks on commodity hardware.

---

## Security & governance

* Dual‑layer guard: TinyBERT at ingress and egress; optional per‑node gates.
* PII redaction hooks: Plug custom redactors or use defaults.
* Metrics & logs: Observe what ran, where, and how long.
* Responsible use: See SECURITY.md for reporting and best practices.

---

## Licensing & compliance

* Code is AGPL‑3.0‑or‑later.
* If you host it over a network, AGPL §13 requires offering users the Corresponding Source of your modifications (link or endpoint pointing to the running commit). See COMPLIANCE.md for drop‑in headers/footers.
* Models and third‑party libs may have different licenses. Track them in THIRD_PARTY_LICENSES.md.

---

## Roadmap (snapshot)

* Blueprint Foundation: ✅ Core scheduler, config‑as‑data, safety layer, docs.
* Section 1 (Core Intelligence): 🟨 Skeleton agents with swap points for real BitNet backends.
* Section 2 (Learning Loop): ⏳ Hooks for dataset curation, eval harness, fine‑tuning.
* Section 3 (Autonomous Logic): 🟨 Planning heuristics, retries/fallbacks, budget routing.
* Section 4 (UI): ✅ CLI + Web UI (single‑turn & multi‑turn chat); Colab demo.

---

## Frequently


Conclusion

BitNet-Hybrid-Engine brings modern orchestration to the edge, pairing BitNet’s efficiency with TinyBERT’s guardrails in a simple YAML→DAG flow. You get compact, composable pipelines that run safely on modest hardware and scale when needed. Start with the sample pipeline, swap in your backends, and ship with AGPL §13 transparency baked in.

Ready to give it a try?


GlitchGuru: A Novel Framework for Visually-Augmented Retrieval-Augmented Generation with Retro 8-bit Animation

Introduction

The increasing sophistication of Large Language Models (LLMs) and Retrieval-Augmented Generation (RAG) systems has propelled advancements in artificial intelligence, yet the interpretability and user engagement of these complex systems remain areas of active research. GlitchGuru addresses these challenges by presenting a distinctive framework that integrates RAG-powered document intelligence with an 8-bit animated visualization system. This approach aims to enhance user understanding and interaction with AI by providing real-time, retro-styled visual feedback on the AI's internal processes, thereby bridging the gap between advanced computational tasks and intuitive user experience.

Methodology and Architecture

GlitchGuru's architecture is meticulously designed to facilitate a seamless integration of modern AI capabilities with a nostalgic visual aesthetic. The system comprises several interconnected modules:

1. Core AI Engine: This module provides multi-LLM support, allowing integration with various LLM APIs (e.g., OpenAI's GPT models) and offering flexibility in model selection. It serves as the primary reasoning component, processing user queries and generating responses.
2. RAG Document Intelligence: Central to GlitchGuru's document processing capabilities, this engine leverages ChromaDB for vector storage and Sentence Transformers for embedding documents. It enables the system to retrieve relevant information from a predefined corpus (organized into "AnythingLLM-style Workspaces") to augment LLM responses, ensuring contextually rich and accurate outputs. The document processing pipeline involves:
   * Text Extraction: Handling various document formats (PDF, DOCX, TXT).
   * Vector Embedding: Converting extracted text into numerical representations suitable for semantic search.
   * Retrieval: Identifying and extracting pertinent document segments based on user queries.
3. 8-bit Visualization System: This is GlitchGuru's most distinguishing feature. A Lua-based animation engine is employed to visualize the AI's operational states in real-time through charming pixel art animations. These states include:
   * Thinking: Represented by a pulsing brain animation with neural activity.
   * Writing: Depicted with a typewriter effect and a blinking cursor.
   * Processing: Illustrated by gear animations and progress bars.
   * RAG Processing: Visualized through a vector database animation.
   * Error: Signified by classic error symbols.

   This visualization layer provides immediate, intuitive feedback to the user, demystifying the AI's black-box operations.
4. Workspace Manager: This component facilitates the organization and management of documents, akin to containerized document management systems. It enables users to define and interact with specific document collections for RAG operations.

The operational flow begins with user input, which is routed through the Workspace Manager to the RAG Engine. The RAG Engine retrieves relevant information, which is then passed to the LLM Interface. The LLM processes the query and retrieved context, generating a response. Throughout these stages, the 8-bit Visualizer provides real-time animated feedback, culminating in an animated output to the user.
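The operational flow described above can be sketched in a few lines of Python. This is an illustrative sketch, not GlitchGuru's actual code: the names `AIState`, `embed`, `retrieve`, and `answer` are hypothetical, the bag-of-words similarity is a toy stand-in for Sentence Transformers embeddings stored in ChromaDB, the LLM is a plain callable, and the order in which states are emitted is an assumption. The `on_state` callback marks where an animation layer could hook in.

```python
import math
import re
from collections import Counter
from enum import Enum
from typing import Callable, List


class AIState(Enum):
    """Visual states named in the text; this enum itself is hypothetical."""
    THINKING = "thinking"
    WRITING = "writing"
    PROCESSING = "processing"
    RAG_PROCESSING = "rag_processing"
    ERROR = "error"


def embed(text: str) -> Counter:
    """Toy bag-of-words vector (stand-in for a real embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, workspace: List[str], k: int = 1) -> List[str]:
    """Return the k workspace chunks most similar to the query."""
    q = embed(query)
    ranked = sorted(workspace, key=lambda chunk: cosine(q, embed(chunk)), reverse=True)
    return ranked[:k]


def answer(
    query: str,
    workspace: List[str],
    llm: Callable[[str, List[str]], str],
    on_state: Callable[[AIState], None],
) -> str:
    """Route a query through retrieval and the LLM, reporting each state."""
    try:
        on_state(AIState.PROCESSING)       # input received
        on_state(AIState.RAG_PROCESSING)   # searching the workspace
        context = retrieve(query, workspace)
        on_state(AIState.THINKING)         # LLM reasons over query + context
        response = llm(query, context)
        on_state(AIState.WRITING)          # response is being rendered
        return response
    except Exception:
        on_state(AIState.ERROR)
        raise


# Usage with a fake LLM and a two-chunk workspace (both made up for illustration).
workspace = [
    "ChromaDB stores the document vectors.",
    "The animation engine draws pixel art frames.",
]
states: List[AIState] = []
reply = answer(
    "Where are vectors stored?",
    workspace,
    llm=lambda q, ctx: f"Based on: {ctx[0]}",
    on_state=states.append,
)
```

Passing the state reporter in as a callback keeps the pipeline independent of how the states are drawn, so the same flow could drive a pixel-art renderer, a log line, or a test double.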

Key Features and Applications

GlitchGuru's unique combination of features lends itself to several compelling applications:

* Enhanced User Engagement: The retro 8-bit animations create a novel and engaging user experience, making interaction with an AI assistant more dynamic and enjoyable.
* Improved Transparency and Interpretability: By visually representing AI states such as "thinking" or "processing," GlitchGuru offers a degree of transparency into the AI's operation, potentially aiding user understanding of response generation.
* Interactive Learning Tools: The platform can serve as an intuitive educational tool, allowing users to observe AI processes in real-time, which can be particularly beneficial for learning about RAG systems and LLM interactions.
* Rapid Prototyping and Development: The multi-platform deployment (Google Colab and standalone desktop application) and customizable animation engine allow developers to quickly prototype and demonstrate AI systems with a unique visual flair.
* Novel UI/UX for AI Applications: GlitchGuru demonstrates a new paradigm for user interfaces in AI, where visual cues and thematic aesthetics play a significant role in defining the interaction.

Conclusion

GlitchGuru represents an innovative synthesis of cutting-edge AI (RAG, multi-LLM support) with a visually rich, retro-themed interface. By rendering the abstract processes of an AI assistant into charming 8-bit animations, it not only enhances user engagement but also contributes to a more intuitive understanding of complex AI operations. This framework holds significant promise for applications requiring an accessible, transparent, and highly engaging human-AI interaction paradigm, pushing the boundaries of how users perceive and interact with intelligent systems.

© 2025 Shiy Sabiniano


RogueDB: A Framework for On-Demand Synthetic SQL Database Generation for Testing and Educational Applications

Introduction

The development and testing of software applications, particularly those interacting with relational databases, frequently necessitate access to diverse and representative datasets. However, obtaining or creating such data can be time-consuming, resource-intensive, and fraught with privacy concerns when using real-world information. RogueDB addresses this challenge by providing a Python-based toolkit for the rapid generation of temporary SQLite databases populated with structured, synthetic test data across various domains. This framework aims to streamline the development lifecycle for database-dependent applications, facilitate robust testing, and offer an accessible environment for SQL education and prototyping.

Methodology and Architecture

RogueDB employs a modular architecture designed for efficient data generation and database management, integrated with a user-friendly interface. The core components include:

1. Data Generation Module (datagenerators/generatedata.py): This module is responsible for producing synthetic data tailored to specific domains. It currently supports:
   - Mathematical Problems: Generation of simple arithmetic operations.
   - English Texts: Creation of sentences and paragraphs, potentially including basic Natural Language Processing (NLP) metrics.
   - Logical Statements: Construction of true/false, conditional, and deductive logic examples.
   - Financial Data: Simulation of realistic transactions (e.g., deposits, withdrawals, transfers) and associated account balances.
2. Database Setup Module (databasesetup/createdb.py): This script orchestrates the creation and population of an SQLite database, typically named testdata.db. It leverages the data generation functions to create distinct tables for each data type. A key feature is its ability to selectively generate data based on user arguments, allowing for focused testing scenarios.
3. API Integration (api/app.py): RogueDB incorporates a FastAPI application, providing a RESTful interface for programmatic interaction with the generated testdata.db. This API offers endpoints for listing tables, executing SELECT queries (with rudimentary safety checks), and retrieving data from specific tables, thereby enabling external applications to consume the synthetic data.
4. Interactive Interface (colab/RogueDBColabNotebook.ipynb): For ease of use and accessibility, particularly in educational or rapid prototyping contexts, RogueDB includes a Google Colab notebook. This notebook integrates an ipywidgets-powered Graphical User Interface (GUI) for selecting data types to be generated. Furthermore, it facilitates the local launch of the FastAPI API within the Colab environment, exposing it via a public ngrok URL for immediate access to interactive API documentation (Swagger UI).

The overall workflow begins with user configuration in the Colab notebook, proceeds through data generation and database population, and culminates in direct SQL querying capabilities within the notebook or via the exposed FastAPI.
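To make the generate, populate, and query workflow concrete, here is a minimal sketch using only the Python standard library. It is not RogueDB's own code: the function names (`generate_transactions`, `build_database`, `run_select`), the `financial_transactions` table and its columns, and the specific SELECT-only check are all assumptions made for illustration, and an in-memory database stands in for the testdata.db file.

```python
import random
import sqlite3


def generate_transactions(n, seed=0, opening_balance=1000.0):
    """Build n synthetic (id, kind, amount, balance) rows. Hypothetical generator."""
    rng = random.Random(seed)  # seeded so the same call always yields the same rows
    balance = opening_balance
    rows = []
    for i in range(1, n + 1):
        kind = rng.choice(["deposit", "withdrawal", "transfer"])
        amount = round(rng.uniform(1, 200), 2)
        # Deposits add to the balance; withdrawals and outgoing transfers subtract.
        signed = amount if kind == "deposit" else -amount
        balance = round(balance + signed, 2)
        rows.append((i, kind, amount, balance))
    return rows


def build_database(conn, n_rows=50):
    """Create one table (assumed schema) and fill it with synthetic rows."""
    conn.execute(
        "CREATE TABLE financial_transactions ("
        "id INTEGER PRIMARY KEY, kind TEXT NOT NULL, "
        "amount REAL NOT NULL, balance REAL NOT NULL)"
    )
    conn.executemany(
        "INSERT INTO financial_transactions VALUES (?, ?, ?, ?)",
        generate_transactions(n_rows),
    )
    conn.commit()


def run_select(conn, sql):
    """Rudimentary guard: accept a single statement that starts with SELECT."""
    statement = sql.strip().rstrip(";")
    if not statement.lower().startswith("select") or ";" in statement:
        raise ValueError("only single SELECT statements are allowed")
    return conn.execute(statement).fetchall()


# Usage: an in-memory database stands in for the on-disk file.
conn = sqlite3.connect(":memory:")
build_database(conn, n_rows=50)
count = run_select(conn, "SELECT COUNT(*) FROM financial_transactions")[0][0]
by_kind = run_select(
    conn, "SELECT kind, COUNT(*) FROM financial_transactions GROUP BY kind"
)
```

A string-prefix check like this is deliberately simple and would not stop every unwanted query. For anything beyond a throwaway database, opening the SQLite connection in read-only mode is a sturdier safeguard.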

Applications

RogueDB serves a multifaceted role across several domains:

- Application Testing: It provides a controlled environment for validating application logic against varied data, crucial for ensuring software robustness without exposing sensitive production data.
- Proof-of-Concept and Prototyping: Developers can rapidly instantiate populated databases to test new ideas or demonstrate features, significantly reducing setup overhead.
- Educational Use: Its straightforward environment makes it an ideal tool for students and practitioners to learn and practice SQL queries in a risk-free setting.
- API Development and Testing: The integrated FastAPI enables developers to build and test applications that consume data from a live, temporary SQL database.
- Quick Benchmarking: The framework can be utilized for preliminary performance assessments of database interactions on small-scale datasets.

Conclusion

RogueDB represents a valuable tool for software development and education, offering an efficient and accessible method for generating temporary, diverse, and structured synthetic SQL databases. By abstracting the complexities of data generation and providing both GUI and API interfaces, it democratizes access to high-quality test data, thereby accelerating development cycles, improving testing methodologies, and enhancing pedagogical approaches to database management. Its design emphasizes ease of deployment, particularly within cloud-based environments like Google Colab, positioning it as a practical solution for modern development challenges.
